Abstract

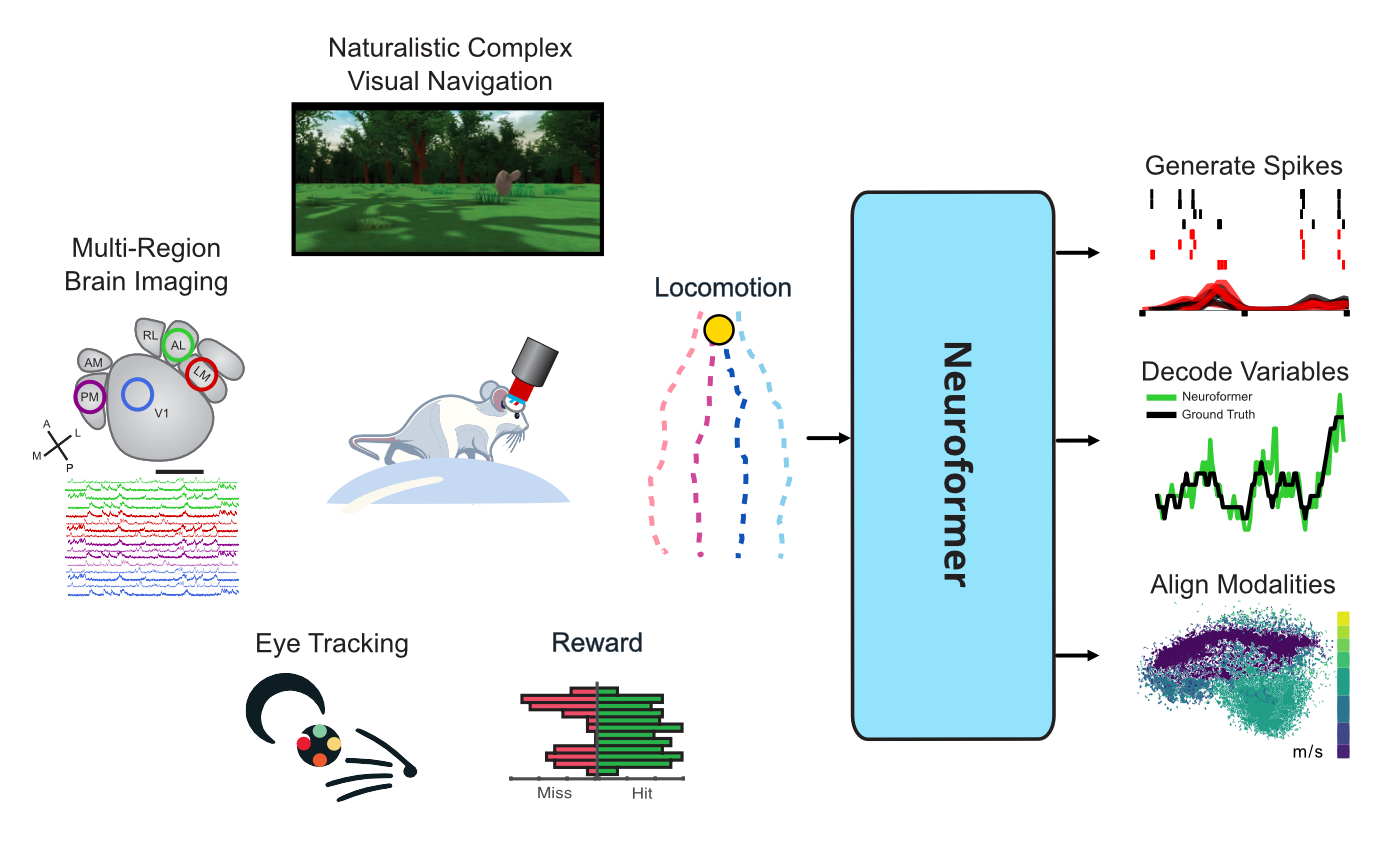

We present Neuroformer, a method for generatively pretraining transformers on multimodal and multitask neuronal data. To achieve this, Neuroformer uses a novel spatiotemporal tokenization scheme that models individual neurons as tokens.
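To make the idea of neurons-as-tokens concrete, here is a minimal Python sketch. It assumes that every spike inside a fixed context window becomes one token, made of the firing neuron's ID and its time offset from the window start, quantized to a fixed resolution. The function name `tokenize_spikes`, the 50 ms window, and the 10 ms resolution are illustrative assumptions, not Neuroformer's actual values or code.

```python
from typing import List, Tuple

def tokenize_spikes(
    spikes: List[Tuple[float, int]],  # (spike_time_s, neuron_id) pairs
    window_start: float,
    window_len: float = 0.05,  # hypothetical 50 ms context window
    dt: float = 0.01,          # hypothetical 10 ms time-offset resolution
) -> List[Tuple[int, int]]:
    """Return (neuron_id, time_bin) tokens for the spikes inside one window.

    Each spike becomes one token: its identity is the neuron ID and its
    temporal position is the offset from the window start, quantized to dt.
    Tokens are ordered by time bin, then by neuron ID.
    """
    window_end = window_start + window_len
    tokens = []
    for t, neuron in spikes:
        if window_start <= t < window_end:
            # The offset is non-negative here, so int() truncation acts as floor.
            time_bin = int((t - window_start) / dt)
            tokens.append((neuron, time_bin))
    tokens.sort(key=lambda tok: (tok[1], tok[0]))
    return tokens
```

For example, spikes at 2 ms (neuron 3) and at 13 ms and 41 ms (neuron 7) yield `[(3, 0), (7, 1), (7, 4)]`, while a spike at 60 ms falls outside the window and is dropped.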

Our model can generate synthetic spiking data conditioned on varied stimuli, like video and reward, create useful embeddings using contrastive learning, and transfer to other downstream tasks like predicting behavior.

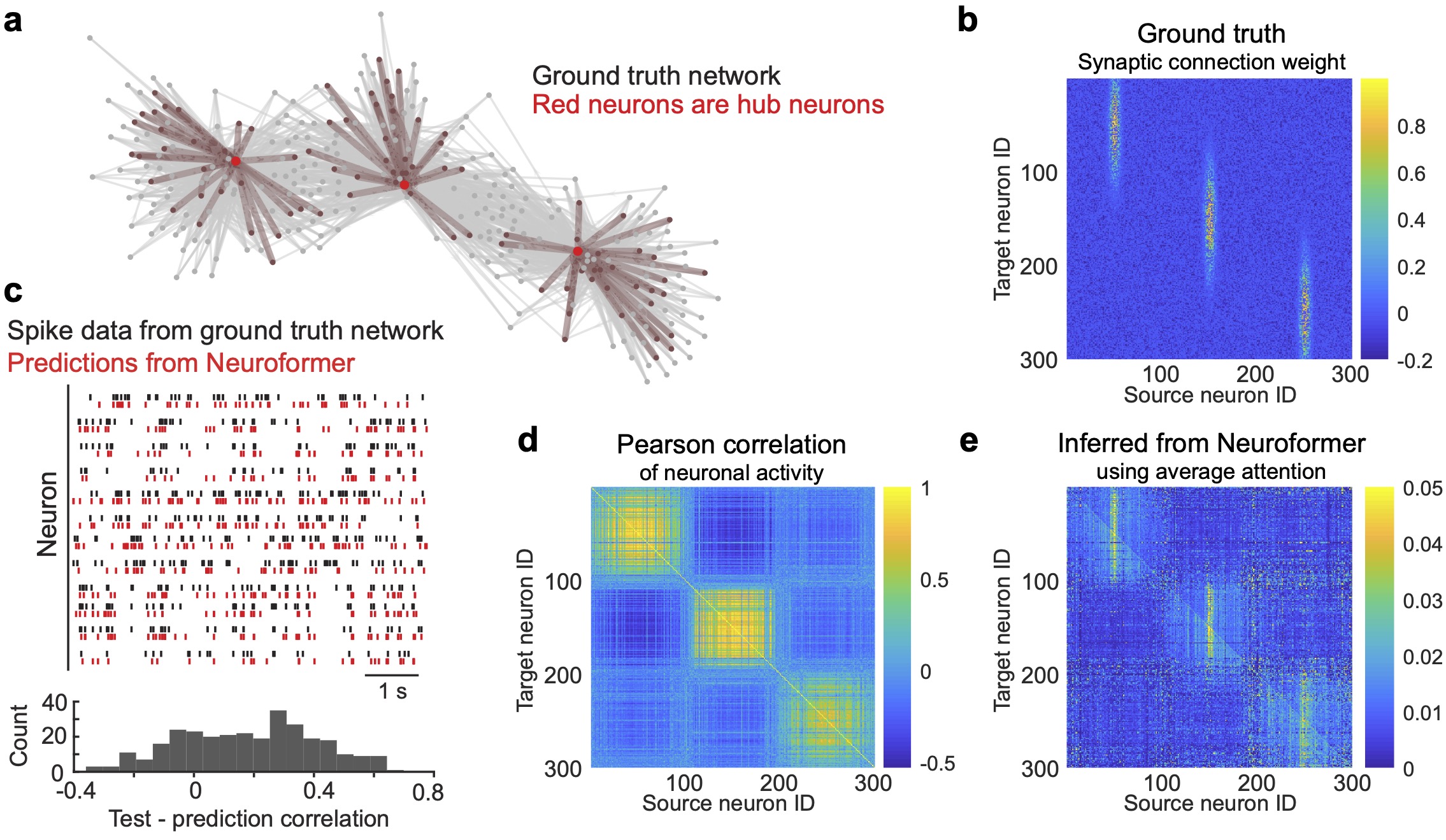

We first trained Neuroformer on simulated datasets and found that it both accurately predicted simulated neuronal circuit activity and intrinsically inferred the underlying circuit connectivity, including its direction. When pretrained to decode neural responses, the model predicted a mouse's behavior with only few-shot fine-tuning, suggesting that it begins learning to do so directly from the neural pretraining objective, without any explicit supervision. We hope that Neuroformer can capture the imagination of both neuroscientists and machine learning researchers and push the limits of systems neuroscience data, both for building better models of the brain and beyond!

Attention Mirrors Hub Connectivity

A unimodal Neuroformer model (akin to a vanilla GPT) trained on simulated data with hub connectivity naturally revealed the underlying connectivity pattern in its self-attention weights.
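One simple way to read connectivity out of attention is sketched below, under stated assumptions: treat the weight with which neuron i (query) attends to neuron j (key), averaged over heads and layers, as evidence for a directed edge j → i. A hub that drives many neurons should then collect large column sums. The functions `infer_hubs` and `directed_edges`, the averaging, and the threshold are hypothetical choices for illustration, not the analysis used in the paper.

```python
from typing import List, Tuple

def infer_hubs(attention: List[List[float]], top_k: int = 1) -> List[int]:
    """Rank key neurons by the total attention they receive.

    attention[i][j] is assumed to be the head- and layer-averaged weight
    with which query neuron i attends to key neuron j.
    """
    n = len(attention)
    received = [sum(attention[i][j] for i in range(n)) for j in range(n)]
    return sorted(range(n), key=lambda j: received[j], reverse=True)[:top_k]

def directed_edges(attention: List[List[float]], threshold: float) -> List[Tuple[int, int]]:
    """Return (source, target) pairs where target attends to source above threshold.

    Self-attention (i == j) is ignored, since it says nothing about connectivity.
    """
    n = len(attention)
    return [
        (j, i)
        for i in range(n)
        for j in range(n)
        if i != j and attention[i][j] > threshold
    ]

# Toy attention matrix in which every neuron attends mostly to neuron 1.
attn = [
    [0.1, 0.8, 0.1],
    [0.2, 0.7, 0.1],
    [0.3, 0.6, 0.1],
]
print(infer_hubs(attn, top_k=2))  # [1, 0]
print(directed_edges(attn, 0.5))  # [(1, 0), (1, 2)]
```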

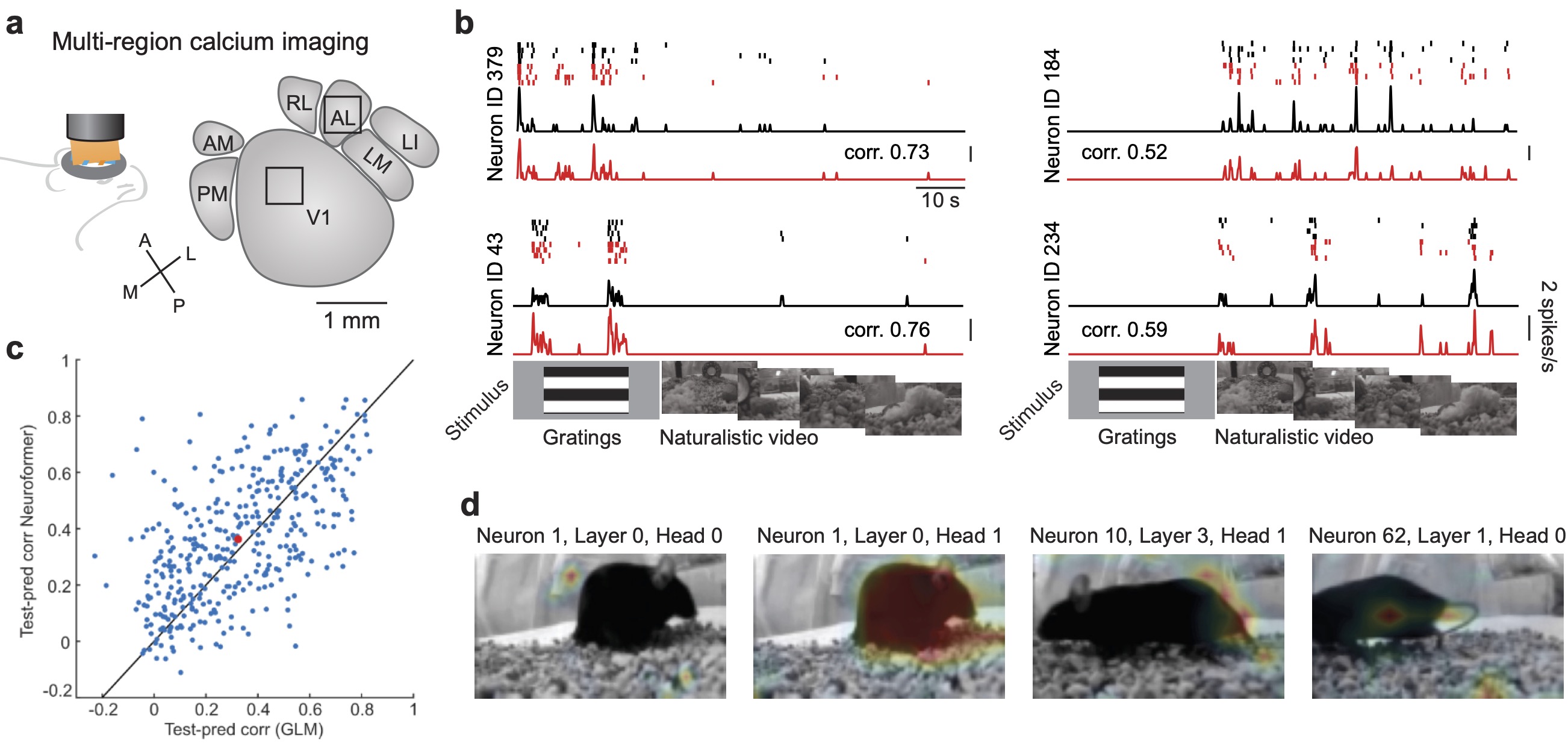

Visual Attention

Neuroformer's attention modules can attend to each neuron individually. Here we show the attention map over a visual scene from a mouse's point of view, conditioned on its neural activity.
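An attention map like this can be illustrated with standard single-head scaled dot-product cross-attention, assuming a neuron's token embedding acts as the query and the embeddings of video-frame patches act as keys. The embeddings, the 2 × 2 patch grid, and the function names below are hypothetical; this is a sketch of the general mechanism, not Neuroformer's implementation.

```python
import math
from typing import List

def patch_attention(query: List[float], patch_keys: List[List[float]]) -> List[float]:
    """Scaled dot-product attention weights of one query over image patches."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in patch_keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def to_grid(weights: List[float], rows: int, cols: int) -> List[List[float]]:
    """Reshape row-major patch weights into a rows x cols map for plotting."""
    assert len(weights) == rows * cols
    return [weights[r * cols:(r + 1) * cols] for r in range(rows)]

# Toy example: a 2 x 2 grid of patches with 2-d key embeddings.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.0, -1.0], [-1.0, 0.0]]
grid = to_grid(patch_attention(query, keys), rows=2, cols=2)
```

Here the top-left patch, whose key is most aligned with the query, receives the largest weight, and the weights over all patches sum to one.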

Simulations of Real Neural Activity Conditioned on Multimodal Input

Generative spatiotemporal pretraining enables Neuroformer to generate synthetic neural activity conditioned on multimodal input, such as video and reward. This activity effectively captures the variability of the underlying neural circuit.
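Generating activity conditioned on input can be pictured as ordinary autoregressive sampling over spike tokens. The sketch below assumes a model exposed as a function from the tokens generated so far to next-token logits, with the stimulus already folded in, plus an end-of-window token; both are hypothetical interfaces, and `toy_model` is a stand-in rather than a trained network. Because each token is drawn at random rather than by argmax, repeated runs with the same stimulus yield different spike trains, which is how sampling can reflect trial-to-trial variability.

```python
import math
import random
from typing import Callable, List, Optional, Sequence

def sample_window(
    next_token_logits: Callable[[Sequence[int]], List[float]],
    eos_token: int,
    max_tokens: int = 64,
    temperature: float = 1.0,
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Autoregressively sample spike tokens for one time window.

    `next_token_logits` is a hypothetical stand-in for a trained model that
    has already been conditioned on the stimulus (e.g. video and reward).
    """
    if rng is None:
        rng = random.Random()
    tokens: List[int] = []
    for _ in range(max_tokens):
        logits = next_token_logits(tokens)
        scaled = [x / temperature for x in logits]
        m = max(scaled)
        weights = [math.exp(s - m) for s in scaled]  # unnormalized softmax
        tok = rng.choices(range(len(weights)), weights=weights, k=1)[0]
        if tok == eos_token:  # model signals the end of the window
            break
        tokens.append(tok)
    return tokens

# Toy stand-in model: strongly prefers token 2 for three steps, then ends.
def toy_model(tokens: Sequence[int]) -> List[float]:
    return [0.0, -50.0, 50.0] if len(tokens) < 3 else [50.0, -50.0, -50.0]

spikes = sample_window(toy_model, eos_token=0, rng=random.Random(0))
```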

Simultaneous Multi-task Decoding

Joint multi-task training enables a single model to simultaneously decode speed and eye position.
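A common way to train one model on several decoding targets at once is to sum per-task losses. The sketch below assumes regression heads for speed and eye position scored by mean squared error, with optional per-task weights; the loss form, the weights, and the task names are assumptions, since this page does not specify them.

```python
from typing import Dict, List, Optional

def mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def multitask_loss(
    preds: Dict[str, List[float]],
    targets: Dict[str, List[float]],
    weights: Optional[Dict[str, float]] = None,
) -> float:
    """Weighted sum of per-task MSE losses; tasks default to weight 1.0."""
    weights = weights or {}
    return sum(weights.get(task, 1.0) * mse(preds[task], targets[task]) for task in preds)

preds = {"speed": [1.0, 2.0], "eye_position": [0.0, 0.0]}
targets = {"speed": [1.0, 4.0], "eye_position": [1.0, 1.0]}
print(multitask_loss(preds, targets))                         # 3.0
print(multitask_loss(preds, targets, {"eye_position": 2.0}))  # 4.0
```

Sharing one backbone and summing the losses means a single forward pass produces both predictions, and the weights let one task be emphasized if it trains more slowly.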

Speed decoding is precise and significantly outperforms conventional methods.

Contrastive Learning of Multimodal Neural Latent Embeddings

Contrastive learning enables Neuroformer to learn useful embeddings of neural activity. Here we show 3-dimensional neural latent representations over time, colored by speed.
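Contrastive alignment of modalities is often implemented with a symmetric InfoNCE (CLIP-style) objective: neural and stimulus embeddings from the same time step form a positive pair, and all other pairs in the batch act as negatives. The sketch below assumes that objective, cosine similarity, and a temperature of 0.07; these are common defaults rather than confirmed details of Neuroformer.

```python
import math
from typing import List

Vector = List[float]

def _normalize(v: Vector) -> Vector:
    """Scale a (nonzero) vector to unit length so dot products are cosines."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def _log_softmax_at(row: Vector, idx: int) -> float:
    """log(softmax(row)[idx]), computed stably via log-sum-exp."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return row[idx] - lse

def symmetric_info_nce(neural: List[Vector], stimulus: List[Vector], temperature: float = 0.07) -> float:
    """CLIP-style loss: item i of each batch is a positive pair, all others negatives."""
    a = [_normalize(v) for v in neural]
    b = [_normalize(v) for v in stimulus]
    n = len(a)
    logits = [[sum(x * y for x, y in zip(a[i], b[j])) / temperature for j in range(n)] for i in range(n)]
    # Neural -> stimulus: pick the matching stimulus in each row.
    loss_ns = -sum(_log_softmax_at(logits[i], i) for i in range(n)) / n
    # Stimulus -> neural: the same classification over columns.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_sn = -sum(_log_softmax_at(columns[j], j) for j in range(n)) / n
    return (loss_ns + loss_sn) / 2

eye = [[1.0, 0.0], [0.0, 1.0]]
aligned = symmetric_info_nce(eye, eye)
swapped = symmetric_info_nce(eye, [[0.0, 1.0], [1.0, 0.0]])
```

Correctly paired embeddings give a loss near zero, while swapping the pairs drives it up sharply, which is the pressure that pulls matching modalities together in the latent space.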

Easily Incorporate New Modalities

Easily customize your model's input modalities and decoding options by simply editing the configuration file. You can specify which modalities to use, whether to decode them or use them as input, and in which block to include them. For more details, visit our repo here.
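As an illustration of the kind of choices the configuration exposes, the sketch below uses a hypothetical Python dictionary and splits modalities into model inputs, grouped by block, and decoding targets. The field names `use`, `predict`, and `block` and the block names are invented for this example and are not the repository's actual schema.

```python
from typing import Dict, List, Tuple

# Hypothetical config: field and block names are illustrative, not the repo's schema.
CONFIG = {
    "modalities": {
        "video": {"use": True, "predict": False, "block": "frame"},
        "speed": {"use": True, "predict": True, "block": "behavior"},
        "reward": {"use": False, "predict": False, "block": "behavior"},
    }
}

def split_modalities(config: dict) -> Tuple[Dict[str, List[str]], List[str]]:
    """Return ({block: [input modalities]}, [modalities to decode])."""
    inputs: Dict[str, List[str]] = {}
    targets: List[str] = []
    for name, opts in config["modalities"].items():
        if not opts["use"]:
            continue  # modality disabled entirely
        if opts["predict"]:
            targets.append(name)  # decoded as an output
        else:
            inputs.setdefault(opts["block"], []).append(name)  # fed in as input
    return inputs, targets
```

With this config, video enters the model through the frame block, speed is decoded, and reward is ignored.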

Related Links

Other great concurrent works have also explored scaling transformer training on neural data:

- Neural Data Transformer 2: Multi-context Pretraining for Neural Spiking Activity

- A Unified, Scalable Framework for Neural Population Decoding

In contrast to these works, Neuroformer:

- Leverages generative pretraining of neural data to learn from and predict neural responses, rather than only training to decode behavior from neural activity.

- Scales to complex multimodal inputs such as video.

- Incorporates multimodal contrastive learning to align modalities and learn useful representations.

To cite the Neuroformer model paper:

@misc{antoniades2023neuroformer,
  title={Neuroformer: Multimodal and Multitask Generative Pretraining for Brain Data},
  author={Antonis Antoniades and Yiyi Yu and Joseph Canzano and William Wang and Spencer LaVere Smith},
  year={2023},
  eprint={2311.00136},
  archivePrefix={arXiv},
  primaryClass={q-bio.NC}
}

To cite the V1AL data:

@article{YU20222810,
  abstract = {The mouse visual cortex contains interconnected higher visual areas, but their functional specializations are unclear. Here, we used a data-driven approach to examine the representations of complex visual stimuli by L2/3 neurons across mouse higher visual areas, measured using large-field-of-view two-photon calcium imaging. Using specialized stimuli, we found higher fidelity representations of texture in area LM, compared to area AL. Complementarily, we found higher fidelity representations of motion in area AL, compared to area LM. We also observed this segregation of information in response to naturalistic videos. Finally, we explored how receptive field models of visual cortical neurons could produce the segregated representations of texture and motion we observed. These selective representations could aid in behaviors such as visually guided navigation.},
  author = {Yiyi Yu and Jeffrey N. Stirman and Christopher R. Dorsett and Spencer L. Smith},
  doi = {10.1016/j.cub.2022.04.091},
  issn = {0960-9822},
  journal = {Current Biology},
  keywords = {mouse higher visual areas, visual texture, visual motion, form-motion segregation, visual neuron model, calcium imaging, Gabor filter model, naturalistic video, visual edge density, mutual information},
  number = {13},
  pages = {2810-2820.e5},
  title = {Selective representations of texture and motion in mouse higher visual areas},
  url = {https://www.sciencedirect.com/science/article/pii/S0960982222007308},
  volume = {32},
  year = {2022},
  bdsk-url-1 = {https://www.sciencedirect.com/science/article/pii/S0960982222007308},
  bdsk-url-2 = {https://doi.org/10.1016/j.cub.2022.04.091}
}